CERN70: Preparing for the future

12 December 2024

Part 23, the final part of the CERN70 feature series. Find out more: cern70.cern

Gian Giudice is Head of CERN’s Theoretical Physics department

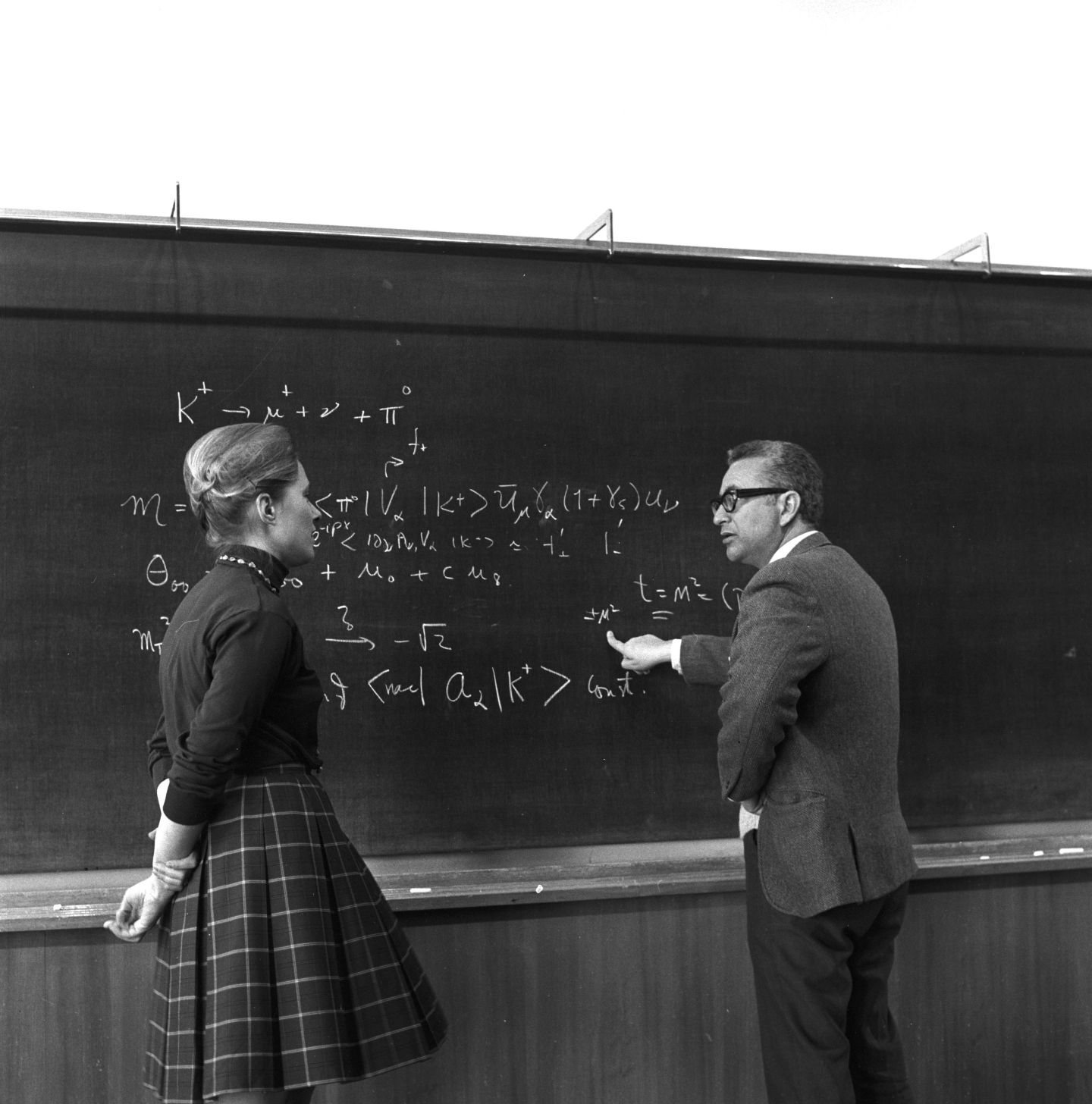

Advancements in fundamental knowledge have always been driven by a dialogue between theory and experiment. Theory sometimes opens up new avenues of exploration, which experimentalists then venture into, either validating them or, on the contrary, finding a new direction for their research. At other times, experimentalists produce a completely unexpected result that requires an explanation, a theoretical framework.

From CERN’s earliest days, its founders recognised the importance of theory for experimental physics. The Theory group was one of the first to be created. It was set up in May 1952, under the leadership of the Danish Nobel laureate in physics Niels Bohr, one of the founding fathers of quantum mechanics. Initially located at Bohr’s Institute of Theoretical Physics in Copenhagen, it moved to Geneva in 1957.

At that time, the landscape of fundamental physics was evolving rapidly. Experiments were detecting exotic particles in cosmic rays and in collisions at brand new accelerators in the United States, and the resulting menagerie seemed to defy all logic. Based on these successive discoveries, theorists established the quark model, which explained the existence of this multitude of exotic particles. Building on the theoretical advances of previous decades, they developed the Standard Model, providing a theoretical framework for particles and the forces that bind them together. This model proved to be extraordinarily effective, enabling physicists to make precise predictions about the interactions between particles, which were subsequently validated by experiments at accelerators.

The CERN Theory group and CERN’s experiments made many contributions to building and verifying the Standard Model throughout the 1970s, for example with the discovery of neutral currents in 1973. They helped to consolidate the model in the 1980s, notably with the discovery of the intermediate W and Z bosons and the numerous results from the Large Electron–Positron Collider (LEP). At the end of the 1990s, the particle landscape appeared to be well organised, with a coherent classification system and established and verified equations.

However, the Brout-Englert-Higgs mechanism, on which the Standard Model is based and through which particles acquire their mass, had not yet been validated. When the Higgs boson was discovered at the Large Hadron Collider (LHC) in 2012, the final piece of the Standard Model puzzle fell into place.

Was the quest over? The LHC had answered the crucial question of the Brout-Englert-Higgs mechanism and made incredibly precise measurements. But the Standard Model theory, powerful as it was, had many gaps. The community hoped that the unprecedented energy of the LHC would reveal other particles that were predicted by theories beyond the Standard Model or, at least, that anomalies would indicate the existence of new physics. Some of these theories have since been ruled out and measurements continue.

In the absence of new clues, particle physics is at a crossroads, and there are many possible paths to follow. The High-Luminosity LHC, which will start up in 2030, will continue the exploration, providing experimentalists with a very large volume of data. For the longer term, the community is designing instruments that could reach higher energies and thus enable unknown areas to be explored. For example, the Future Circular Collider (FCC) study is examining the feasibility of a more powerful collider. All of the options are being studied in the framework of the European Strategy for Particle Physics. The quest to understand the Universe at the smallest and largest scales therefore continues.

Recollections

Gian Giudice

“In her speech at the CERN70 ceremony, Ursula von der Leyen said: ‘Every physicist in the world wants to work at CERN.’ After spending nearly 32 years at this Lab, I know exactly what she meant. My time at CERN has been the most fantastic experience of my life, from both a scientific and a human point of view.

At the time I joined CERN as a fellow in 1993, particle physics had just gone through a heroic period. In the previous 30 years, knowledge of fundamental physics had been transformed from a confused zoology of particles into a precise and successful description based on a single mathematical principle: gauge symmetry in quantum field theory. LEP had just conclusively established this principle as the ultimate law in particle physics. Notwithstanding this impressive result, the Standard Model (SM) had limitations. The agreement with experimental data was perfect, but there were too many unanswered questions, mostly related to the structural aspects of the theory and to cosmological puzzles. The general feeling was that many of these questions would find their answers in the next layer of physical reality, ready to be discovered in the following 30 years.

When I arrived at CERN, I had high hopes for a revolutionary breakthrough to take place at LEP or the LHC. In the ensuing years, the LHC indeed triggered a revolution in particle physics, but not of the kind I was expecting. On the one hand, the discovery of the Higgs boson proved that the spontaneous breaking of electroweak symmetry – already identified by LEP – is completed at short distances by a single spin-zero particle. On the other hand, the lack of any other particles or unexpected phenomena at the LHC showed that there must exist an energy gap between the SM and the next layer of microscopic reality. These two results represent the legacy of the LHC, whose consequence was to completely reshuffle the cards in particle physics, leaving us today even more puzzled about the SM than we were before the project started.

The conceptual revolution triggered by the LHC is a measure of the success of the scientific method. Research in fundamental physics is a combination of theoretical speculations and experimental exploration. More often than not, it leads to surprises, and this has certainly been the case for the LHC results.

The LHC discoveries of the Higgs boson and the new-physics energy gap exemplify the power of the scientific method. The precision reached in these measurements was astounding and, in many cases, exceeded anything that could have been imagined at the beginning of the project. The secret behind this success was not only the superb performance of the CERN accelerator complex and the LHC detectors, but also the advances in the theoretical calculations of the SM backgrounds. Only with all these accomplishments put together was it possible to extract precision measurements out of hadron-collider data.

The interplay between theory and experiment has always been the key to CERN's success. Most of the LEP achievements would not have been possible without an intense theory/experiment collaboration. The LHC has continued on LEP's path, bringing the symbiotic theory/experiment relationship to an unprecedented level of sophistication, as I have witnessed during my 30 years at CERN.

What lies ahead in the next 30 years? Many of the questions that faced particle physics when I arrived at CERN are still unanswered, and the discoveries of the Higgs boson and the energy gap have only sharpened the meaning of those questions and made the need to find answers more urgent. In the meantime, the landscape of research in fundamental physics has broadened beyond traditional boundaries. To tackle the open questions in fundamental physics, we need a diverse experimental programme today, one that includes large and small projects, involves different goals and techniques, and bridges fields. A post-LHC collider project is essential to spearhead research towards shorter distances, and CERN must fulfil its leadership role in particle physics by developing and operating a new ambitious high-energy project during the next 30 years.

Although theory and experiment are always intricately connected in the advancement of fundamental physics, in recent decades experiments have had a predominant role, thanks to the impact of the LHC results. However, my expectation is that the pendulum will swing back, and theory will be the driving force of the next 30 years.

Theorist Freeman Dyson claimed that concept-driven scientific revolutions are highly celebrated by historians but are actually relatively rare. Along the path of scientific progress, the most common revolutions – Dyson argued – are tool-driven. Obvious examples are the discovery of the Medicean stars orbiting Jupiter, made possible by Galileo's telescope, and the discovery of the Higgs boson, which could not have been made without the LHC. But mathematical tools are as important as scientific instruments in triggering revolutions. Examples are infinitesimal calculus, which provided the necessary tool for Newton to understand that elliptical planetary orbits are compatible with a spherically symmetric central force, and differential geometry, which provided the right set-up to formulate general relativity.

My bet is that the tool to profoundly change how theoretical physics develops in the next 30 years will be artificial intelligence (AI). AI is already revolutionising all aspects of experimental data analysis, but it could also transform how theoretical physics operates. Moreover, understanding how AI solves physics problems may reveal new logical frameworks, well beyond the methods currently used by humans. The whole meaning and definition of theoretical physics is likely to change in the next 30 years and this will open new horizons to scientific exploration.”

---

Gian was a speaker at the first CERN70 public event, “Unveiling the Universe”. Additional CERN70 events explored the case of the (still) mysterious Universe and the prospect of exploring farther with machines for new knowledge.